Rethinking Research Assessment for the Greater Good: Findings from the RPT Project

The review, promotion, and tenure (RPT) process is central to academic life and career advancement. It shapes where faculty direct their attention, research, and publications. By examining the RPT process, we can inform actions that lead towards more open research.

Between 2017 and 2022, we conducted a research project that involved collecting and analyzing more than 850 RPT guidelines and 338 survey responses from scholars at 129 research institutions across Canada and the US. Starting with a literature review of academic promotion and tenure processes, we launched six studies using mixed-methods approaches such as surveys and matrix coding.

So how do today’s universities and colleges incentivize open access research? Read on for six key takeaways from our studies.

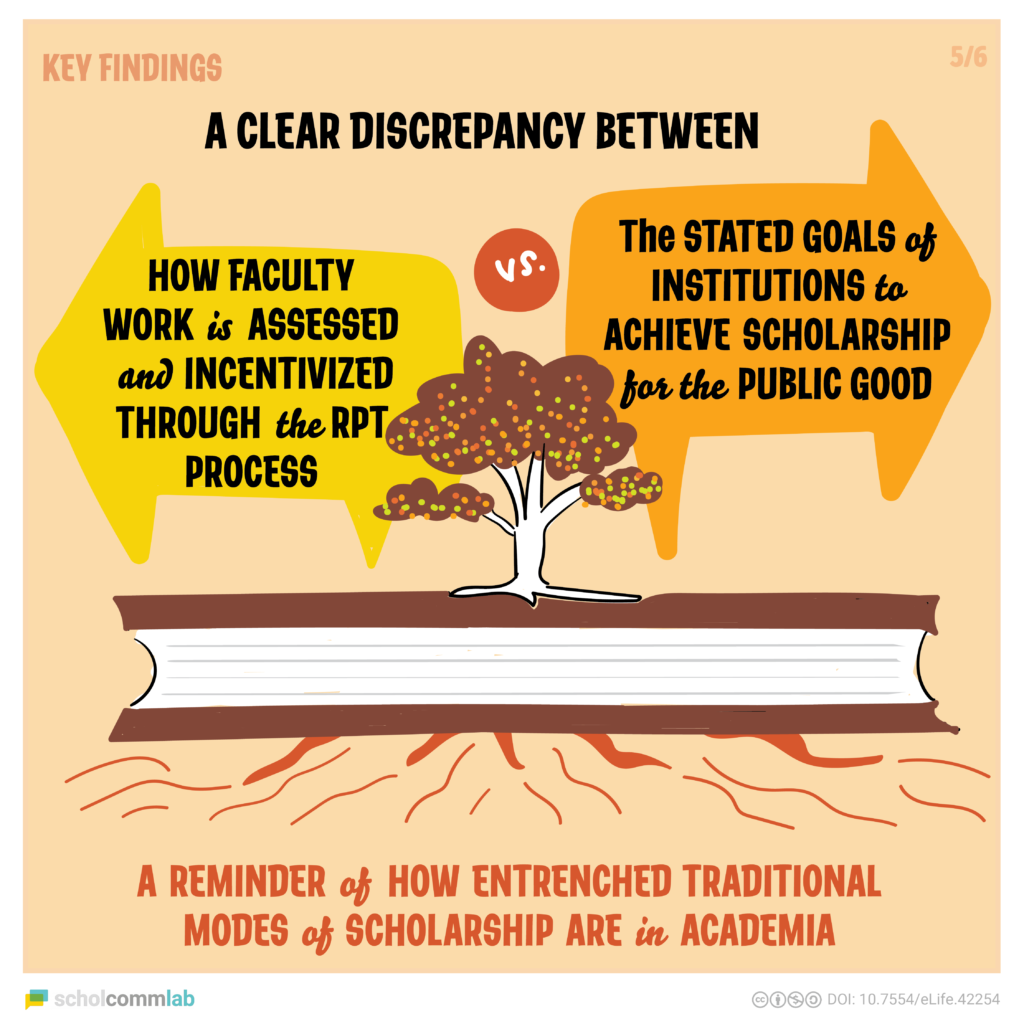

1. Faculty assessments do not align with institutional goals

In a study published in eLife in 2019, we assessed the degree to which RPT documents included guidelines specific to open access, open data, and open education.

We found that while institutions want faculty to engage in public outreach, there are no explicit incentives or structures for assessing contributions to public scholarship. Faculty were rewarded for traditional research outputs and citations. The terms “Public” and “Community” appear often in RPT documents, yet public scholarship outputs were treated as service contributions, not research. Only 5% of institutions mentioned “Open Access” in their RPT documents.

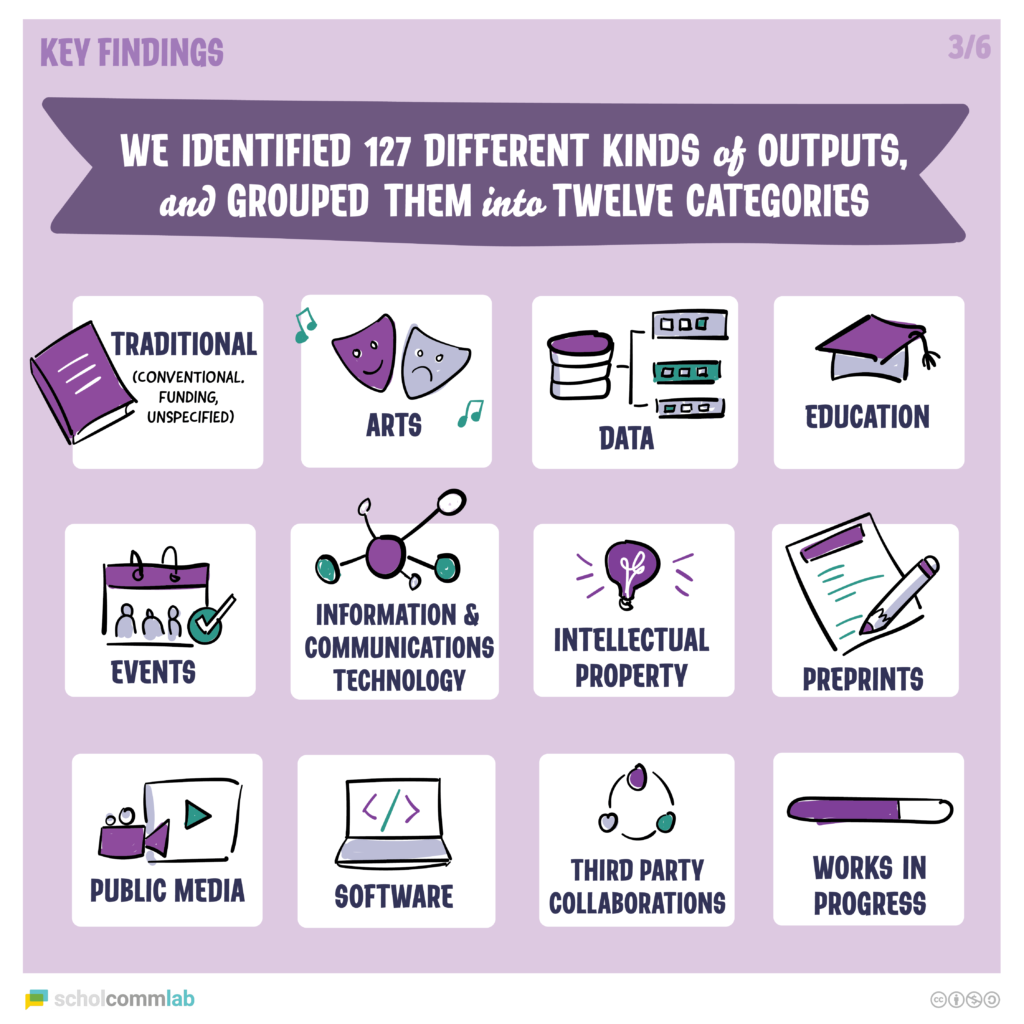

2. Non-traditional outputs are undervalued in the RPT process

So if there were no incentives for public scholarship, what kinds of outputs did the RPT guidelines mention? In a book chapter for the Open Handbook of Linguistic Data Management (2020), we explored how non-traditional outputs are assessed in promotion and tenure.

Around 95% of institutions mentioned traditional outputs such as journal articles and book chapters. A variety of other research outputs, such as open datasets, software, and works in progress, also appeared in RPT documents across institution types and disciplines. While research activities are beginning to be recognized more broadly, our findings suggest that current structures of faculty assessment have yet to value non-traditional scholarly outputs.

3. Definitions of “quality,” “impact,” and “prestige” are vague and circular

In a study published in PLOS in 2021, we examined how faculty define terms commonly used in RPT documents.

We found that faculty often defined “quality,” “impact,” and “prestige” in circular and overlapping ways. Researchers and their colleagues each applied their own, often differing, understanding of these terms. Interestingly, these varying definitions were not related to age, gender, or academic discipline. Our findings suggest that we can’t rely on these ill-defined and highly subjective terms for evaluation.

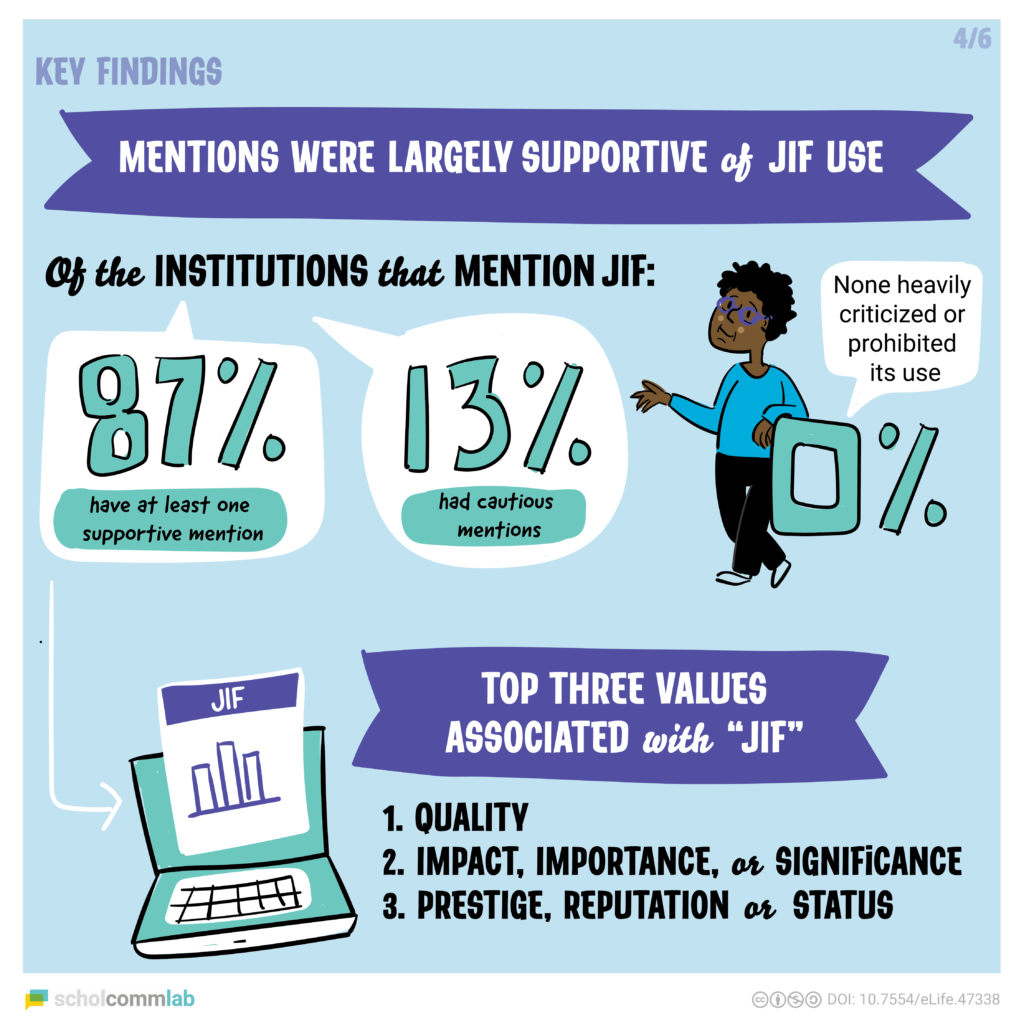

4. Impact factor persists in academic evaluations, despite its limitations

In a study published in eLife in 2019, we explored the use of the Journal Impact Factor (JIF) in RPT documents. We analyzed how this controversial metric was mentioned and defined.

We found that only 23% of institutions mentioned “impact factor” explicitly in their RPT documents. While this may seem low, the documents used more than a dozen other terms that faculty may associate with JIF, such as “prestigious journal,” “leading journal,” and “top journal.” Despite little to no evidence that JIF is a valid measure of research quality, faculty still face pressure to publish in high-JIF venues, which limits the adoption of open access publishing.

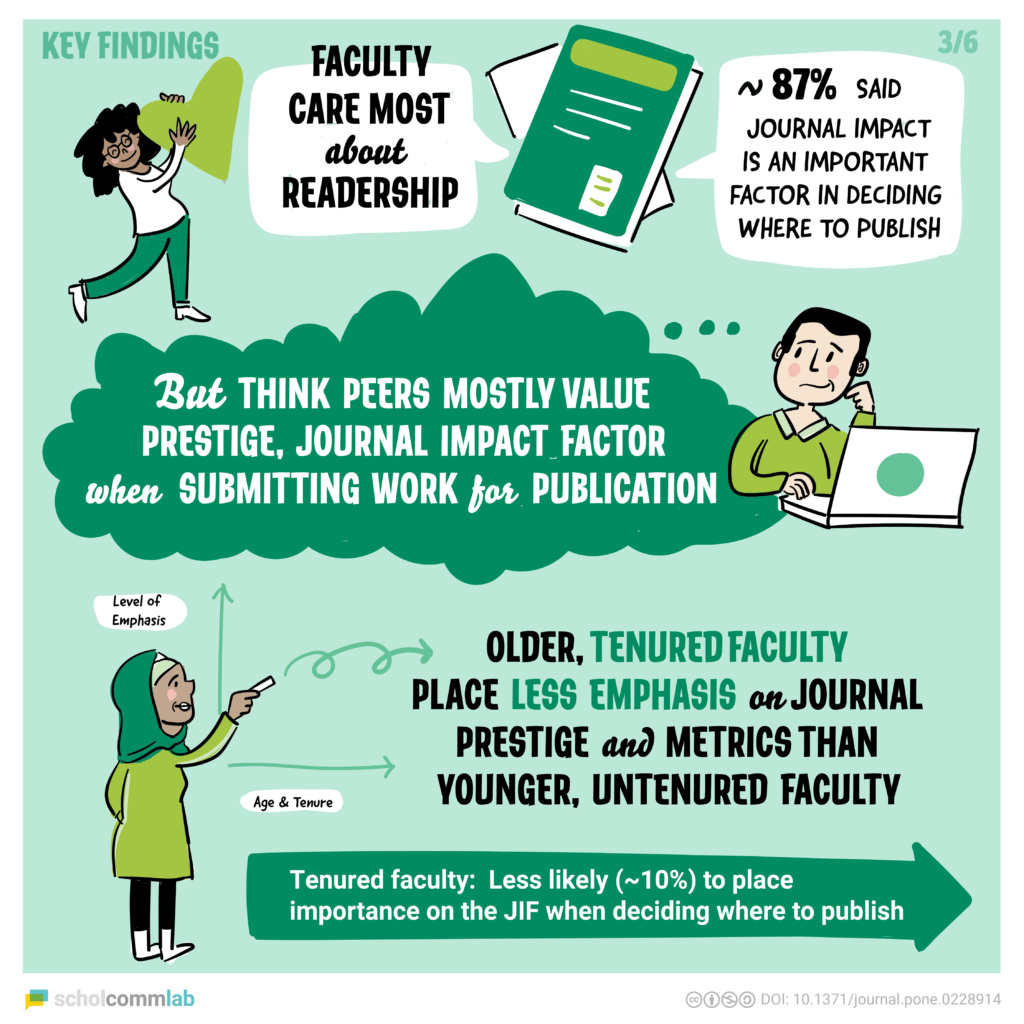

5. Faculty prioritize readership but think their peers value prestige

In another study (published in PLOS in 2020), we examined what drives faculty to publish where they do. The results were surprising.

While faculty prioritize readership when choosing where to submit their work, they believe their peers are driven by journal prestige and metrics such as the impact factor. After readership, faculty cared most about journal prestige and whether their peers read the journal. As for the RPT process, faculty thought they would be evaluated on publication quantity and prestige, although this perception varied by age, career stage, and institution type.

6. Collegiality: a double-edged sword in tenure decisions

In our sixth and final publication (published in PLOS in 2022), we explored whether and how “collegiality” is used in the RPT process.

While “collegiality” was rarely defined in RPT documents, the faculty we surveyed said it plays an important role in the tenure process. Faculty at research-intensive universities were more likely to see collegiality as a factor in their RPT processes. Our results suggest that clear policies on “collegiality” are needed to avoid penalizing those who don’t “fit in.”

Reimagining the future of review, promotion, and tenure

Since we started this project more than five years ago, our papers, blog posts, and tweets have been viewed and shared thousands of times. The work has been covered in more than 15 news stories and blog posts, including in Nature, Science, and Times Higher Education. We’re hopeful that this visibility is a first step towards reimagining the RPT process so that it better reflects the types of scholarship that faculty members themselves want to see in the world.

So where do we go from here?

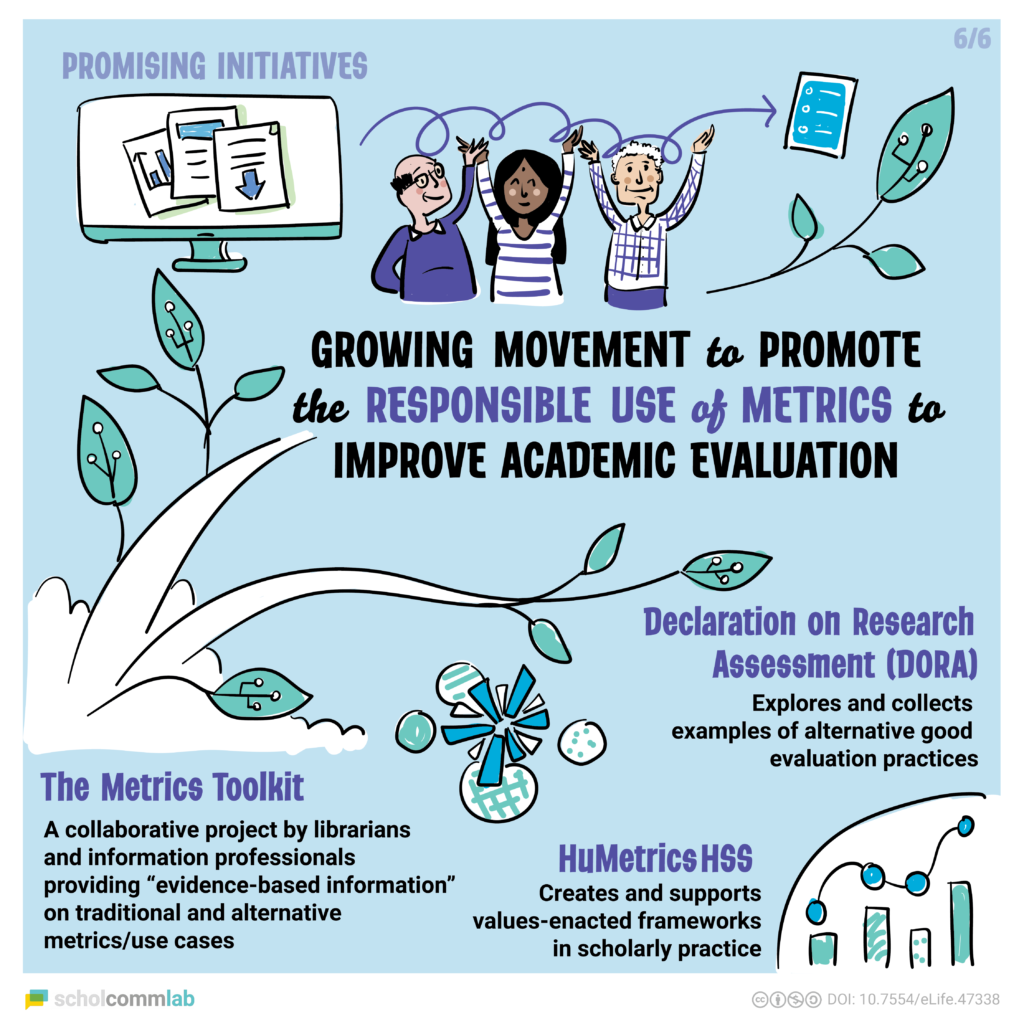

In all of our papers, the overarching conclusion is the same: there’s room for values-based assessment in the RPT process. We need to move away from measuring outputs or judging faculty by ill-defined concepts, and towards rewarding the values, processes, and ways in which people work. As we bring the RPT project to a close, we’d like to highlight two initiatives that have started to pave the way:

1. The Humane Metrics in the Humanities and Social Sciences (HuMetricsHSS) initiative develops and supports values-based approaches to scholarly life, including review, promotion, and tenure. Their paper, Walking the Talk: Toward a Values-Aligned Academy, offers recommendations for wide-scale change that improves and strengthens the impact of all scholarly work. It features work by our lab, challenges academics to rethink and reform the RPT process, and suggests ways to align faculty expectations with institutional values. We invite you to read HuMetricsHSS’s work and reflect on the values you would like to see considered in your institution’s RPT process.

2. The Declaration on Research Assessment (DORA) is a worldwide initiative recognizing the need to improve how researchers and the outputs of scholarly research are evaluated. It offers recommendations, resources, and case studies on best practices for research assessment. We encourage you and your institution to read, sign, and adopt DORA to improve how scholarly output is evaluated.

Finally, we hope this work inspires you to reflect on your own role in upholding the status quo in the RPT process, and on the power you have to change it. As Juan Pablo Alperin says, “I invite you to begin a conversation by sharing with your colleague why access to research matters. Because it does.” By sharing our values and thoughts on the RPT process, we can cultivate an academic culture that rethinks research assessment for the greater good.

We would like to thank and acknowledge the team behind the RPT project: Erin McKiernan, Meredith Niles, Lesley Schimanski, Carol Muñoz Nieves, Lisa Matthias, Michelle La, Esteban Morales, and Diane (DeDe) Dawson. Without your contributions, this work would not have been possible!

For more about the review, promotion, and tenure project, check out the project website, visual overview, blog posts, and media coverage.

[…] Rethinking Research Assessment, ScholCommLab – https://www.scholcommlab.ca/2022/05/04/findings-from-the-rpt-project/ […]